Open Source on Azure: Dive into Drupal #2

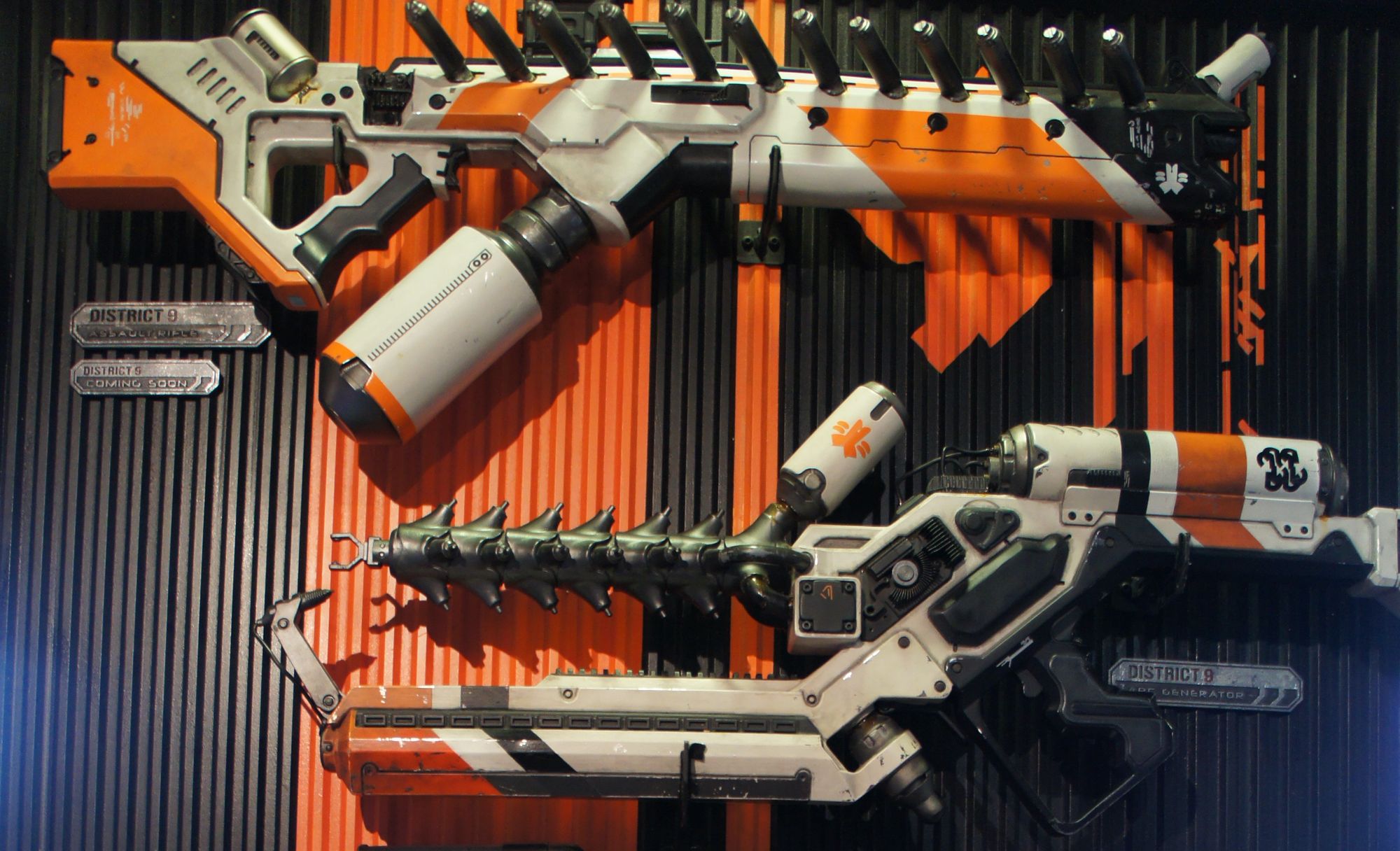

Drupal & DevOps: Choose your weapons

This is the second of a three-part series exploring Azure, Drupal & DevOps through a new website project for the Hampton Hill Theatre.

This post follows on from Part 1, "Product backlog: The kick off", where we went through all the features Azure Boards offers to get us started with Agile & Scrum.

Now we'll dig into the tools needed to start Drupal development, put the code under version control and host it on Azure PaaS.

Move with the times

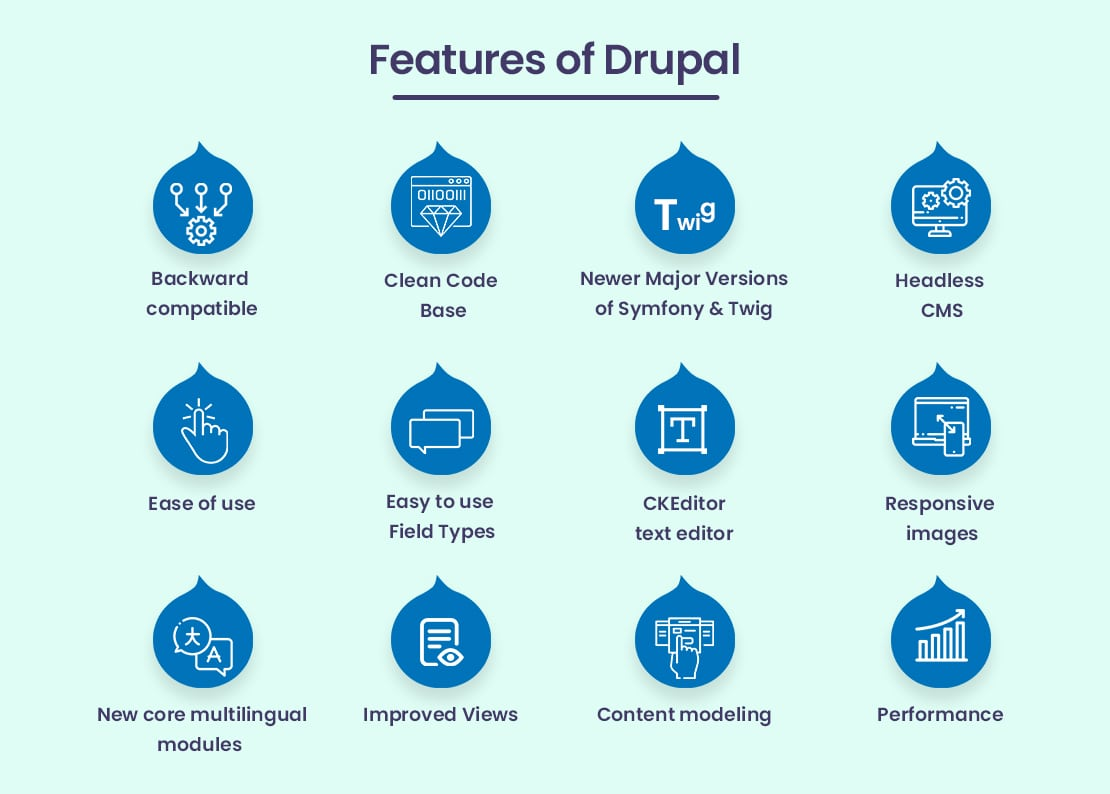

Drupal 9 builds on all of the innovation of Drupal 8 and brings more continuous feature improvements every six months

The move from D7 to D9 brings a whole host of new features to the table, but with great power comes a great... number of moving parts!

Web Skills

So to build all the features above, a Drupal developer ideally needs skills across the full stack (on larger teams these are covered by separate people).

In the case of this project we have:

Front end

- Bootstrap 4.0 - responsive & mobile first framework

- HTML, CSS/SCSS & JavaScript - the bread & butter of front end dev

- npm & webpack - JS package management; compiling & bundling the front end

- Browsersync - live reloading & synchronised cross-browser testing of the front end

- UX & Design - converting designs to Drupal themes

Back end

- PHP - open source scripting & programming language

- Twig - PHP templating, interfacing the front & back end

- Composer - PHP dependency management, installing & autoloading the back-end packages

- Symfony - reusable PHP components

- Drush - command line shell for admin & automation tasks

- YAML (.yml files) - configuration management for modules and settings

- Database - MySQL, queries and export/import

As you can see, that's a lot to cover, and it can lead to some interesting conversations...

Tools of the trade

To get started, the first tool any developer needs is an IDE (integrated development environment), where you do your coding and a lot more.

VS Code was an easy choice: it's customisable, has a huge range of extensions, built-in debugging, previews and an integrated terminal, and it's updated frequently. Pretty much everything you need in one tool.

As they say:

Free. Built on open source. Runs everywhere.

Next we need a local Drupal environment to replicate where the website will live in the cloud.

The term 'local' means working on your own PC/Mac (in this case the latter). 'Dev' usually means a development server, 'Test' is used on larger projects, and 'Prod' or 'Live' is the actual site your users visit. For this project we used Local, Dev and Prod.

Previously I had always worked with MAMP (Mac, Apache, MySQL, PHP), which is a great tool for configuring a local server, but it was high time to switch to Docker containers.

Dockerizing Drupal

Using Docker is now a standard approach for web development.

Docker is great for local development environments because of the small footprint and fast start-up times. More open-source projects are packaging their stacks as Docker images to speed up the installation & collaboration - Drupal.org

Docker images are software packages that contain everything you need to run an app (code, tools, runtime etc). When you run the image it becomes a Docker container.

Picture a container ship: the sea is your OS, the ship is the Docker engine and the containers are the apps/services running on it.

For Drupal there are plenty of choices (Lando, Docker4Drupal...). I chose DDEV, an open-source tool that uses Docker to build local PHP development environments. It has a powerful CLI for running admin tasks as well as Drush and Composer commands.

Spin it up with DDEV

It was very easy to spin up a local D9 environment on macOS; this excellent DigitalOcean article shows the way.

You need to configure the environment to match production. The .ddev/config.yaml file below shows we want PHP, Apache and MySQL in our local container stack.

```yaml
name: hamptonhilltheatre
type: drupal9
docroot: web
php_version: "7.4"
webserver_type: apache-fpm
mysql_version: "5.7"
```
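Because the whole point of this file is to mirror production, a small check can catch drift between the two. Here's a minimal Python sketch, assuming the production values in `PRODUCTION` (hypothetical placeholders, set them to whatever your App Service and database actually run). It only reads top-level `key: value` lines, so it's not a full YAML parser.

```python
from pathlib import Path

# Hypothetical production stack the local DDEV config should mirror.
PRODUCTION = {
    "php_version": "7.4",
    "webserver_type": "apache-fpm",
    "mysql_version": "5.7",
}


def parse_flat_yaml(text: str) -> dict[str, str]:
    """Read top-level `key: value` lines only (not a full YAML parser)."""
    settings = {}
    for raw in text.splitlines():
        if raw[:1] in (" ", "\t", "-"):
            continue  # skip nested keys and list items
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        settings[key.strip()] = value.strip().strip("\"'")  # remove quotes
    return settings


def find_mismatches(local: dict[str, str], production: dict[str, str]) -> dict[str, tuple]:
    """Map each differing key to (local value, production value)."""
    return {
        key: (local.get(key), expected)
        for key, expected in production.items()
        if local.get(key) != expected
    }


if __name__ == "__main__":
    config_file = Path(".ddev/config.yaml")
    if config_file.exists():
        drift = find_mismatches(parse_flat_yaml(config_file.read_text()), PRODUCTION)
        for key, (local, prod) in drift.items():
            print(f"{key}: local={local!r}, production={prod!r}")
        print("No drift found." if not drift else f"{len(drift)} setting(s) differ.")
```

Run from the project root, it prints any setting where local and production disagree.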

Then we set up the scaffolding and folders for the D9 project, with /.ddev holding the config and the Drupal site in /web:

```shell
ddev config --project-type=drupal9 --docroot=web --create-docroot
```

Next we pull the images from Docker Hub and start all the containers (web and database servers), all with this one command:

```shell
ddev start
```

At this point we have the scaffolding but not the house itself, so we finish the installation with Composer, which downloads Drupal core along with its default modules and themes:

```shell
ddev composer create "drupal/recommended-project"
```
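To repeat these steps on another machine, they can be wrapped in a small script. This is a hypothetical helper, not part of DDEV itself: it assumes `ddev` is on your PATH, that it's run from an empty project folder, and it uses the flags exactly as shown in this post (newer DDEV releases may have changed some of them). By default it only prints the commands.

```python
import shlex
import subprocess

# The three commands from this post, in the order they must run.
BOOTSTRAP_STEPS = [
    ["ddev", "config", "--project-type=drupal9", "--docroot=web", "--create-docroot"],
    ["ddev", "start"],
    ["ddev", "composer", "create", "drupal/recommended-project"],
]


def bootstrap(dry_run: bool = True) -> list[str]:
    """Print each step and, unless dry_run, run it; stop at the first failure."""
    executed = []
    for step in BOOTSTRAP_STEPS:
        command = shlex.join(step)
        print(("[dry run] " if dry_run else "$ ") + command)
        if not dry_run:
            subprocess.run(step, check=True)  # raises CalledProcessError on failure
        executed.append(command)
    return executed
```

Call `bootstrap(dry_run=False)` once you're happy with the printed plan.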

Once this is done the local Drupal site can be accessed and configured through the browser or Drush.

This shows how quickly a few commands can get a local environment running in containers, with no machine-specific setup on an individual PC/Mac.

We can also check the security of the images downloaded from Docker Hub by scanning them with a tool like Snyk.

Git it under control

Now that the Drupal site is up and running locally, we can start the front-end and back-end development. But all this slick code needs to go under source (version) control.

Git has been the de facto source control standard for a while now, so there wasn't really a question of looking elsewhere. But the platform for hosting the Git repositories still needed choosing:

The top four Git hosting platforms are:

- GitHub ~56 million users, biggest open-source community & Microsoft focus

- BitBucket ~10 million users, lots of 3rd party integrations

- GitLab ~30 million users, full DevOps tool with monitoring & security

- Azure DevOps < 1 million users?, full DevOps tool and Azure integration

As mentioned in Part 1, we had already started with Azure Boards to set up the backlog, which is a feature of Azure DevOps (ADO).

ADO includes Azure Repos, which offers unlimited private Git repos and full integration with Boards and the CI/CD pipelines (which we'll see later), so it made sense to keep the front-end and back-end code in the same tool.
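Pushing the local repo up to Azure Repos just needs the repo's remote URL, which follows the pattern `https://dev.azure.com/{organization}/{project}/_git/{repository}`. Below is a small sketch that builds it (percent-encoding names that contain spaces) and lists the two Git commands to publish an existing repo; the organisation, project and repo names are hypothetical.

```python
from urllib.parse import quote


def azure_repos_url(organization: str, project: str, repository: str) -> str:
    """HTTPS remote for an Azure Repos repo; each segment is percent-encoded."""
    org, proj, repo = (quote(part, safe="") for part in (organization, project, repository))
    return f"https://dev.azure.com/{org}/{proj}/_git/{repo}"


def push_commands(remote_url: str) -> list[str]:
    """Git commands that publish an existing local repo to the new remote."""
    return [
        f"git remote add origin {remote_url}",
        "git push -u origin --all",  # push every local branch and set upstream
    ]


if __name__ == "__main__":
    url = azure_repos_url("my-org", "Hampton Hill Theatre", "website")  # hypothetical
    print("\n".join(push_commands(url)))
```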

If this were a purely open-source project with lots of external collaborators, then GitHub or GitLab would have been the logical choice. GitHub is the direction of travel Microsoft is taking, so watch this space!

Send it to the cloud

So we now have the backlog, local dev tools and Git repo all set up. The site is being developed and works fine locally, and the code is being pushed up to Azure Repos.

That's great.

But it needs somewhere to live when live.

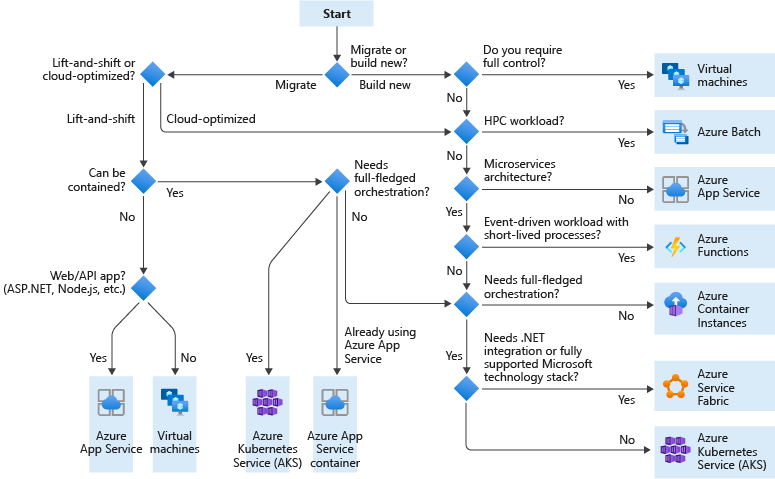

As mentioned in Part 1, we had already chosen Azure as the cloud platform, and its web and compute options are vast. Following the decision tree below (we didn't need VMs or microservices) confirmed that Azure App Service was the right fit for this small site.

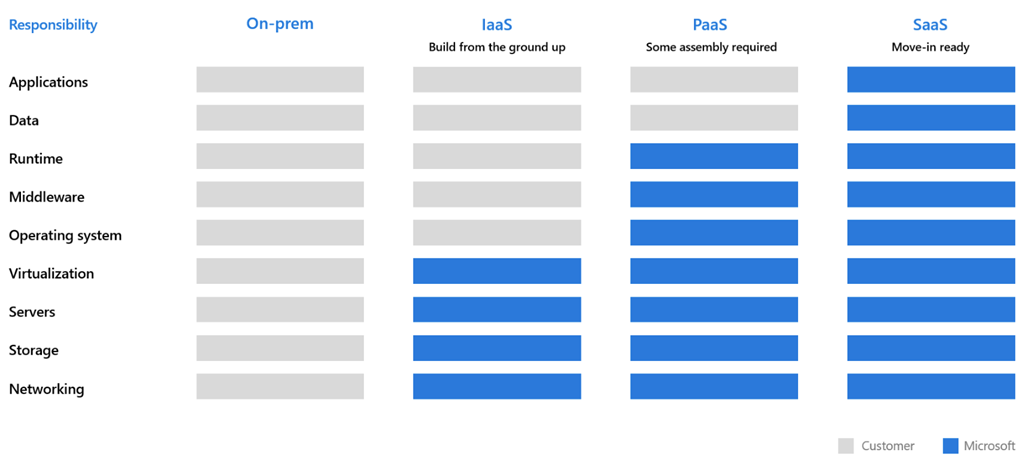

App Service is a Platform as a Service (PaaS) for hosting web apps, APIs and mobile back ends. In a nutshell, with PaaS you bring your app code and data, and Azure does the rest.

An App Service plan defines the underlying VM instances that the apps run on, and the cost depends on the pricing tier and the number of instances running. The built-in load balancer automatically distributes traffic across those instances.
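To make the cost point concrete: every instance in the plan is billed, so the monthly cost scales linearly with the instance count. A rough sketch, assuming a hypothetical hourly rate (check the Azure pricing page for your tier and region) and 730 hours per month, the figure Azure's monthly price estimates are based on.

```python
HOURS_PER_MONTH = 730  # the monthly figure Azure's price estimates are based on


def monthly_plan_cost(hourly_rate: float, instances: int) -> float:
    """Estimate a plan's monthly cost: every instance is billed for the month."""
    if instances < 1:
        raise ValueError("an App Service plan runs at least one instance")
    return round(hourly_rate * HOURS_PER_MONTH * instances, 2)


if __name__ == "__main__":
    rate = 0.10  # hypothetical hourly rate, not a real Azure price
    for n in (1, 2, 3):
        print(f"{n} instance(s): {monthly_plan_cost(rate, n):.2f} per month")
```

With that hypothetical rate, `monthly_plan_cost(0.10, 2)` gives 146.0, exactly double the single-instance figure, which is why the instance count is the main lever on cost within a tier.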

You can choose from a selection of Microsoft-provided runtime stacks (.NET, .NET Core, Java, Ruby, Node.js, PHP or Python), which Microsoft keeps patched and up to date.

As we're running Drupal, we needed to tailor the plan to match the DDEV web container used earlier:

- Operating System = Linux

- Runtime = PHP

- Region = UK South (closest to end users)

- Number of VM instances = 2

- Pricing tier = Basic
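The same plan can be scripted with the Azure CLI's `az appservice plan create`. A sketch, assuming the CLI is installed and you've run `az login`; the resource group and plan names are hypothetical and B1 is assumed as the Basic-tier size. Note the runtime (PHP) isn't a plan setting: it's chosen per app when the apps are created. By default the function only returns the command.

```python
import subprocess


def create_plan(resource_group: str, plan: str, sku: str = "B1", workers: int = 2,
                location: str = "uksouth", dry_run: bool = True) -> list[str]:
    """Build (and optionally run) `az appservice plan create` for a Linux plan."""
    command = [
        "az", "appservice", "plan", "create",
        "--resource-group", resource_group,
        "--name", plan,
        "--is-linux",                         # Operating System = Linux
        "--location", location,               # Region = UK South
        "--sku", sku,                         # Pricing tier = Basic (B1 assumed)
        "--number-of-workers", str(workers),  # Number of VM instances = 2
    ]
    if not dry_run:
        subprocess.run(command, check=True)   # raises if the CLI call fails
    return command


if __name__ == "__main__":
    print(" ".join(create_plan("hht-rg", "hht-plan")))  # hypothetical names
```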

Once the plan is ready, we can create separate Dev and Production apps, ready for deployment.
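Creating the Dev and Production apps on that plan is one `az webapp create` per environment. Again this is a sketch with hypothetical names. The `--runtime` value is an assumption: its string format and the PHP versions on offer depend on your CLI version and what App Service currently supports, so check `az webapp list-runtimes` before running it for real.

```python
import subprocess

RUNTIME = "PHP:7.4"  # assumption: use a value listed by `az webapp list-runtimes`


def create_apps(resource_group: str, plan: str, base_name: str,
                environments=("dev", "prod"), dry_run: bool = True) -> list[list[str]]:
    """Build (and optionally run) one `az webapp create` per environment."""
    commands = []
    for env in environments:
        command = [
            "az", "webapp", "create",
            "--resource-group", resource_group,
            "--plan", plan,
            # The name becomes <name>.azurewebsites.net, so it must be unique.
            "--name", f"{base_name}-{env}",
            "--runtime", RUNTIME,
        ]
        if not dry_run:
            subprocess.run(command, check=True)
        commands.append(command)
    return commands


if __name__ == "__main__":
    for cmd in create_apps("hht-rg", "hht-plan", "hht"):  # hypothetical names
        print(" ".join(cmd))
```

Sharing one plan keeps Dev and Prod on the same instances (and bill); the trade-off is that a heavy Dev workload competes with Prod for resources.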

Azure provides a huge number of features to tinker with, including logging, monitoring, alerts, custom domains, advanced tools via Kudu and... 🆓 SSL certificates, which are a real cost and time saver!

Setting this up is straightforward in the Azure portal or via the Azure CLI, and in the next post you'll see how it can also be done using Infrastructure as Code with Bicep.

Where's the data?

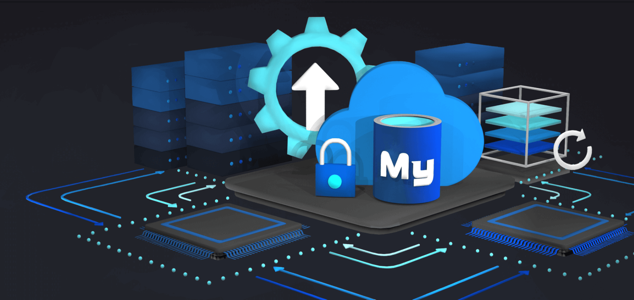

OK, now that we have the App Services, we need a cloud database (again, to match the DDEV database container).

Azure Database for MySQL is a fully managed, scalable database service that would suit our needs.

However, I already had a Linux Azure VM running Ubuntu and MySQL with spare capacity, so it could act as the shared DB server. As it's hosted in UK South, the latency would be minimal and the extra operational costs negligible.
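A quick way to sanity-check the latency claim is to time TCP connections to the MySQL port from where the app runs. A minimal sketch, assuming MySQL listens on the default port 3306 and the VM's firewall/network security group allows the connection. It only times the TCP handshake (no login, no queries), and the host name in the usage comment is hypothetical.

```python
import socket
import statistics
import time


def connect_latency_ms(host: str, port: int = 3306, attempts: int = 5,
                       timeout: float = 3.0) -> float:
    """Return the median TCP connect time to host:port in milliseconds."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


# Usage (hypothetical host):
# print(connect_latency_ms("my-db-vm.uksouth.cloudapp.azure.com"))
```

Taking the median of a few attempts smooths out one-off spikes; run it from a host in the same region as the app for a representative figure.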

Multi-cloud anyone?

Although our focus is Azure, it's worth a quick mention of AWS. Aside from specialist managed Drupal hosting (Acquia, Pantheon, etc.), AWS is a very popular choice.

A matching cloud stack on Amazon would likely require Elastic Beanstalk (Azure App Service), Relational Database Service (Azure Database for MySQL) and Elastic File System (Azure Storage). That was definitely too steep a learning curve to take on for this project.

As multi-cloud is on the rise, it's always worth seeing what else is out there, especially for scaled-up global services requiring high availability and geo-replication across providers (Azure, AWS & GCP).

Wrap up

That's it for part 2!

We've covered a lot of ground to get to this point.

We now have all the tools needed to build out the Drupal site, code under version control and a cloud App & Database service ready to host the final product.

Please check out Part 3, where we finish off the series by looking at Infrastructure as Code and deployments in "CI-CD: Rinse & Repeat into Azure" - see you then!